Building ProMind 2.0: AI Assistants running on ChatGPT

Discover how ProMind 2.0, an AI assistant app built with Ionic+React, NodeJS, FastAPI, and OpenAI API integration, can help you generate content.

As a software engineer, I have been involved in building some amazing applications. My latest pet project harnesses the power of OpenAI GPT models to provide users with AI assistants for different tasks. This post is a follow-up to my previous blog post, and in it I share my experience building ProMind 2.0.

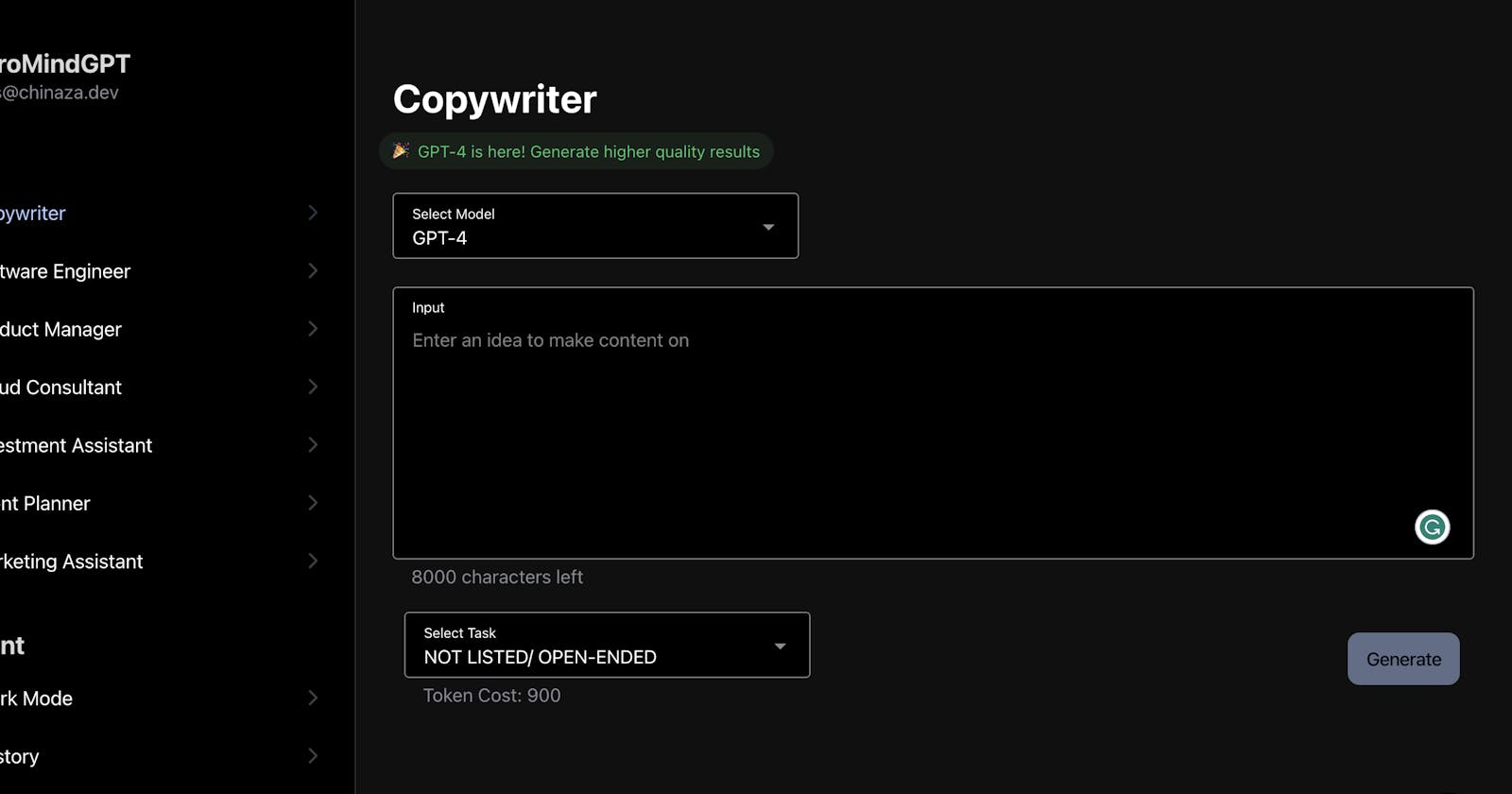

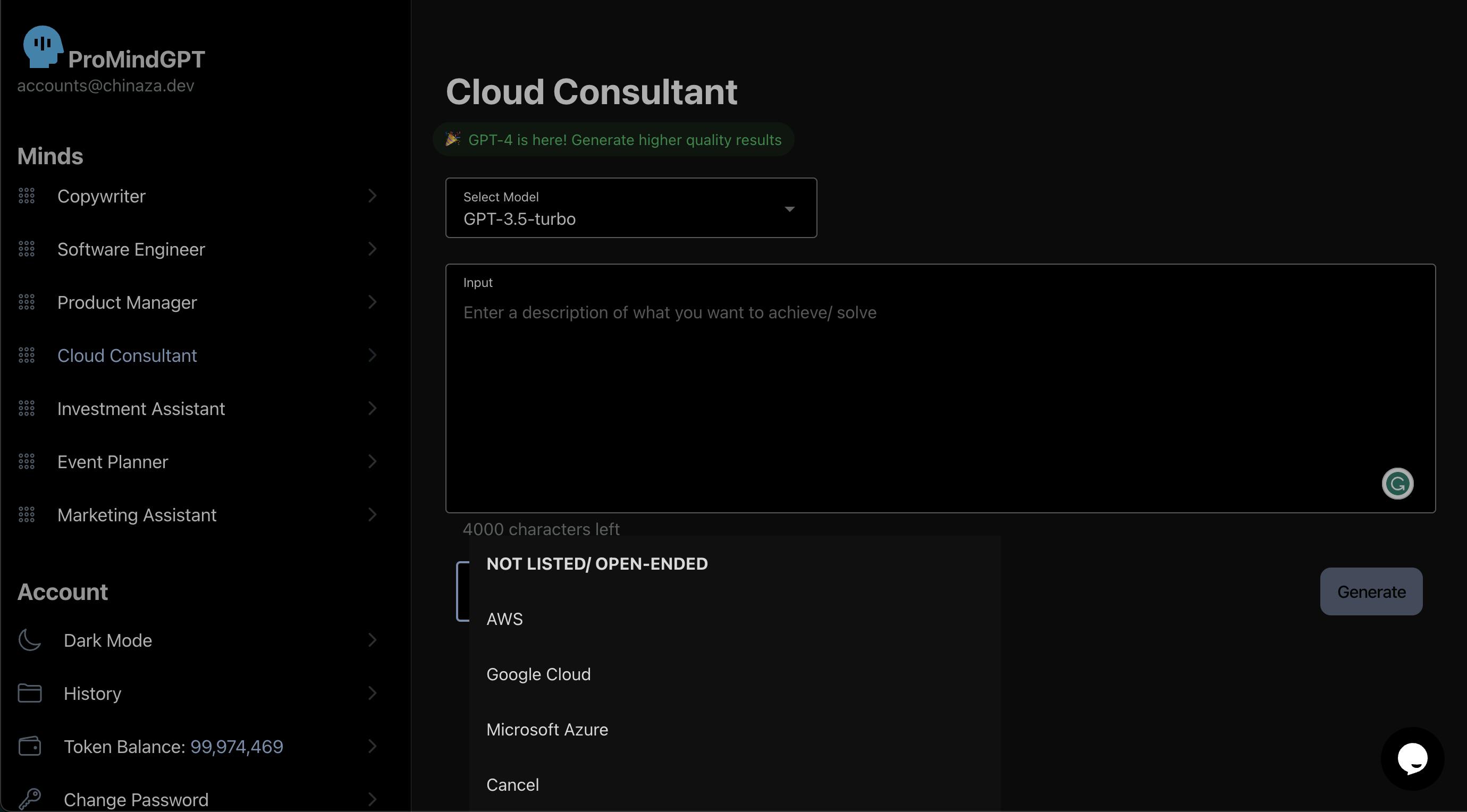

Promind.ai allows you to generate content with AI in just a few clicks. The app works by creating what I call "minds," which are groups of AI assistants. Currently, the app has several minds, including a Copywriter, Software Engineer, Marketing Assistant and more.

Each mind is designed to help you with specific tasks related to that field. For example, if you click on the Cloud Consultant mind, you will be presented with a user interface to select a task, such as AWS, and specify an input, which is essentially an idea, question or creative direction for the model.
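The mind, task, and input relationship described above could be modeled along these lines. This is a minimal sketch, not the app's actual code: the `Mind` and `Task` classes, their fields, and the example instruction text are all hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Task:
    """One task a mind can perform, e.g. "AWS" for the Cloud Consultant."""
    name: str
    instruction: str  # hypothetical: guidance passed to the model for this task


@dataclass
class Mind:
    """A named group of related tasks (hypothetical structure)."""
    name: str
    tasks: dict[str, Task] = field(default_factory=dict)

    def add_task(self, task: Task) -> None:
        self.tasks[task.name] = task

    def get_task(self, task_name: str) -> Task:
        # Fail with a readable message if the UI sends an unknown task.
        try:
            return self.tasks[task_name]
        except KeyError:
            raise ValueError(
                f"{self.name!r} has no task named {task_name!r}"
            ) from None


cloud_consultant = Mind(name="Cloud Consultant")
cloud_consultant.add_task(
    Task(name="AWS", instruction="Answer as an experienced AWS solutions architect.")
)
```

With a structure like this, the task picker in the UI can be populated from `cloud_consultant.tasks`, and the user's free-text input stays separate from the task definition.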

Once you have entered your input and selected your task, you can click the generate button. This triggers a call to the OpenAI Chat Completions API, which uses the selected model, either gpt-3.5-turbo or gpt-4, to generate results.
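As a rough illustration of what that call carries, the sketch below builds the JSON body for a chat completion request. The `model` and `messages` fields with `system` and `user` roles follow the Chat Completions request format, but the function name, the validation, and the idea of putting the task instruction in the system message are assumptions, not a description of how ProMind does it.

```python
import json

# The two models named in this post.
ALLOWED_MODELS = ("gpt-3.5-turbo", "gpt-4")


def build_chat_request(model: str, task_instruction: str, user_input: str) -> dict:
    """Build the JSON body for a chat completion request (hypothetical helper)."""
    if model not in ALLOWED_MODELS:
        raise ValueError(f"unsupported model: {model!r}")
    if not user_input.strip():
        raise ValueError("input must not be empty")
    return {
        "model": model,
        "messages": [
            # Assumption: the selected task becomes the system message.
            {"role": "system", "content": task_instruction},
            {"role": "user", "content": user_input.strip()},
        ],
    }


body = build_chat_request(
    "gpt-3.5-turbo",
    "You are a cloud consultant specializing in AWS.",
    "How should I host a static website?",
)
print(json.dumps(body, indent=2))
```

The resulting dictionary is what would be sent as the body of a `POST` to the `/v1/chat/completions` endpoint. Doing this on the backend rather than in the client keeps the API key out of the browser.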

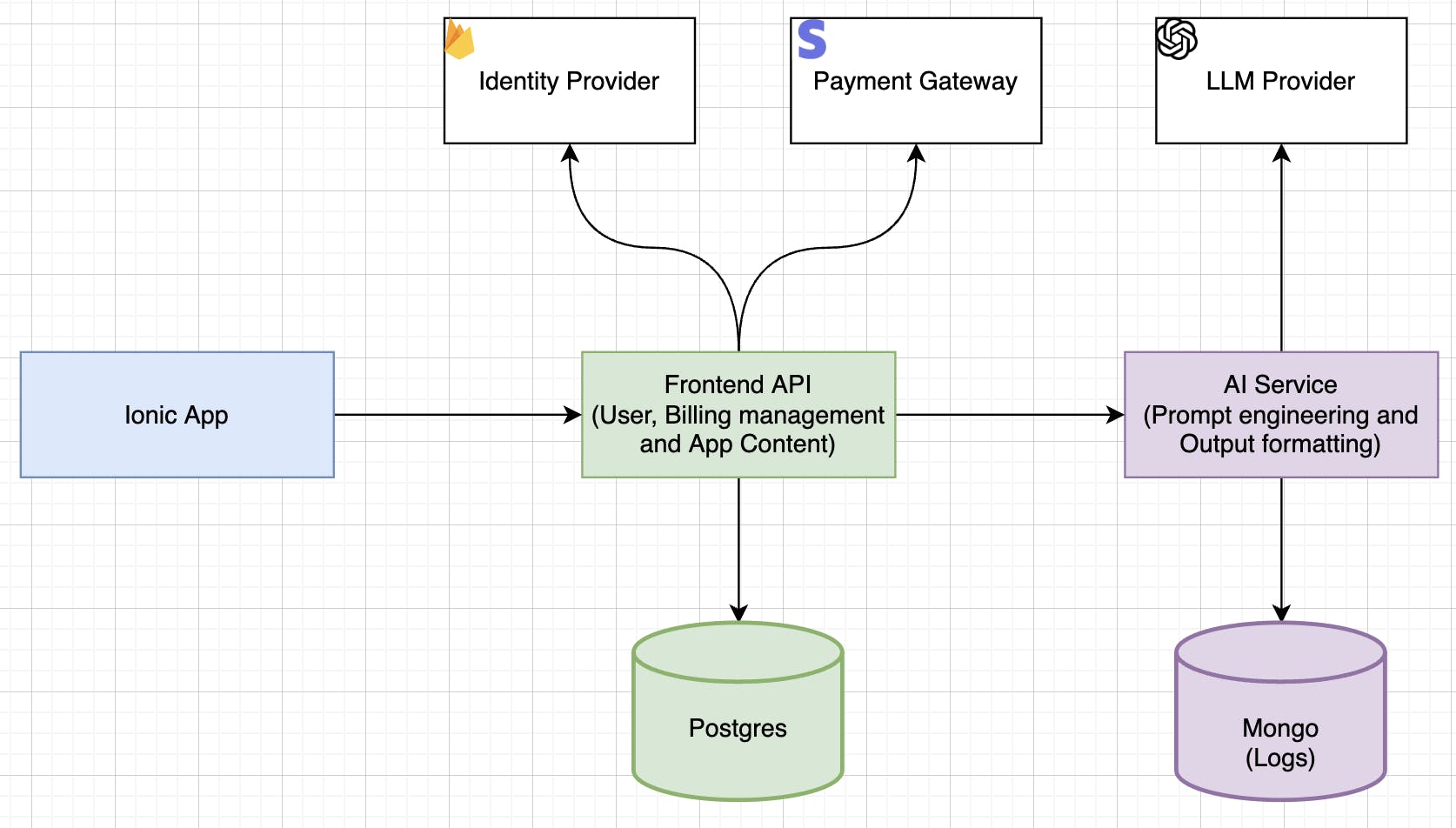

To build the app, I had to consider several factors. One of the most important was the system design and architecture. The first version was built with SolidJS, NodeJS, and FastAPI with OpenAI API integration, plus Firebase for authentication. The latest version runs on Ionic+React.

Ionic allows us to have a single codebase for web, mobile, and desktop, which reduces the overhead of maintaining and updating the app. NodeJS and FastAPI handle user sessions, payment gateway integration, and calls to the OpenAI Chat Completions API. Keeping this work on the backend helps the app stay fast and responsive.

Prompt engineering is another crucial aspect of the app. I had to ensure that the prompts were well designed and broadly captured the possible requests and inputs from users. This has helped generate fairly accurate and relevant results.

If you're looking to build an app like Promind.ai, there are several factors to consider. One of the most important is the model you use. It's generally recommended to use the latest and most capable models for the best results. As of March 2023, the best options are the "gpt-3.5-turbo" and "gpt-4" models.

Prompt design matters just as much: the prompts should be well designed and broadly capture the possible requests and inputs from users. One way to achieve this is to put instructions at the beginning of the prompt and use ### or """ to separate the instruction from the context. This helps the model understand what you are looking for and generate more accurate and relevant results.

When specifying the context, outcome, length, format, style, and so on, be specific, descriptive, and as detailed as possible. It's also helpful to articulate the desired output format through examples. For instance, if you want to extract important entities from a text, show the desired format for the output, with a labeled line each for company names, people names, specific topics, and general themes.
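To make the advice above concrete, here is a small sketch of a prompt builder for the entity extraction example. It puts the instruction first, shows the desired output format, and wraps the context in `"""` delimiters. The function name and the exact wording of the instruction and format lines are illustrative assumptions, not prompts taken from the app.

```python
DELIMITER = '"""'


def build_extraction_prompt(text: str) -> str:
    """Assemble a prompt: instruction, then desired format, then delimited context."""
    instruction = (
        "Extract the important entities mentioned in the text below. "
        "First extract all company names, then all people names, "
        "then specific topics, and finally general themes."
    )
    # Showing the output shape explicitly makes results easier to parse later.
    desired_format = (
        "Desired format:\n"
        "Company names: <comma_separated_list_of_company_names>\n"
        "People names: <comma_separated_list_of_people_names>\n"
        "Specific topics: <comma_separated_list_of_topics>\n"
        "General themes: <comma_separated_list_of_themes>"
    )
    # The delimiters separate the instruction from the user-supplied context.
    context = f"Text: {DELIMITER}\n{text.strip()}\n{DELIMITER}"
    return "\n\n".join([instruction, desired_format, context])


print(build_extraction_prompt("Acme Corp hired Jane Doe to lead its cloud migration."))
```

Because the user's text is fenced off by the delimiters, the same template can be reused for any input without the instruction and the context blurring together.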

Building Promind.ai has been an incredible experience. It has allowed me to explore new technologies and develop my skills as a software engineer. The app has the potential to change the way we generate content and solve problems across different fields. I am excited about the future of Promind.ai and can't wait to see what you build with it.